Summary

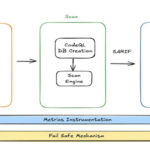

The article outlines the shift from traditional AI models to agentic systems, which can reason, plan, and interact with tools, and it argues that this shift calls for a new approach to infrastructure. It details an AI-ready architecture built from an API Gateway (FastAPI), an Agent Orchestrator (LangChain), a Vector Store (Qdrant), a Tooling Layer, a Model Gateway, an Infrastructure Layer (Terraform + Kubernetes), an Observability Layer, and Secrets/Config management. The guide then walks through building such a system: installing dependencies, initializing LLMs with production-safe defaults, setting up a vector database for retrieval-augmented generation (RAG), creating retrieval tools, building a production-ready agent with memory and multi-step planning, wrapping the agent in a FastAPI service, and deploying it to Kubernetes with Docker and Terraform. Throughout, it stresses that observability is essential for these complex, multi-step workflows.
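
The following is a minimal, standard-library-only sketch of the RAG retrieval step: a vector store, a similarity search, and a retrieval tool with explicit error handling. It rests on several assumptions. Toy three-dimensional vectors stand in for real embeddings. `InMemoryVectorStore`, `cosine`, and `retrieval_tool` are hypothetical names. The store loosely imitates the add-then-search pattern of a vector database such as Qdrant, but it does not use Qdrant's client API. This illustrates the idea and is not the article's implementation.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class InMemoryVectorStore:
    """Hypothetical toy stand-in for a vector database such as Qdrant (not its API)."""
    dim: int
    points: list = field(default_factory=list)  # list of (vector, payload) pairs

    def _check(self, vector):
        # Reject vectors of the wrong size instead of silently truncating them.
        if len(vector) != self.dim:
            raise ValueError(f"expected dimension {self.dim}, got {len(vector)}")

    def add(self, vector, payload):
        """Store a vector with its payload (append only; no update by id)."""
        self._check(vector)
        self.points.append((list(vector), payload))

    def search(self, query, k=3):
        """Brute-force top-k by cosine similarity (a real store would use an index)."""
        self._check(query)
        scored = [(cosine(query, v), p) for v, p in self.points]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def retrieval_tool(store, query_vector, k=2):
    """Hypothetical agent tool: returns context text, or an explicit status string."""
    try:
        hits = store.search(query_vector, k=k)
    except ValueError as exc:
        # Surface the failure to the agent rather than crashing the request.
        return f"ERROR: retrieval failed: {exc}"
    if not hits:
        return "NO_RESULTS"
    return "\n".join(payload["text"] for _, payload in hits)


if __name__ == "__main__":
    store = InMemoryVectorStore(dim=3)
    # Toy vectors stand in for embeddings produced by a real embedding model.
    store.add([1.0, 0.0, 0.0], {"text": "Kubernetes runs the agent pods."})
    store.add([0.0, 1.0, 0.0], {"text": "Terraform provisions the cluster."})
    store.add([0.0, 0.0, 1.0], {"text": "Qdrant stores the document embeddings."})
    print(retrieval_tool(store, [0.9, 0.1, 0.0], k=1))  # Kubernetes runs the agent pods.
    print(retrieval_tool(store, [1.0, 0.0]))  # ERROR: retrieval failed: expected dimension 3, got 2
```

The tool returns a status string instead of raising an exception. This lets the agent see the failure and choose a different next step, which is one way to apply explicit error handling. In production, a real vector index would replace the brute-force cosine loop.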

Why It Matters

Technical IT operations leaders should read this article because it offers a practical blueprint for building and deploying production-grade agentic AI systems. It covers the infrastructure concerns that largely determine whether AI integration succeeds: scalability, cost attribution, security boundaries, and observability. The article covers three areas: cloud-native infrastructure (Kubernetes, Terraform), specific tools (LangChain, Qdrant, FastAPI), and practices such as explicit error handling, cost-efficient model usage, and structured logging. Together these give concrete guidance for designing resilient, modular, and observable AI platforms. That knowledge helps leaders anticipate the operational challenges specific to agentic systems and prepare their organizations to use these capabilities effectively.
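
Structured logging and cost attribution can work together: emit one JSON log line per agent step, tagged with a run ID, a tenant, and an estimated cost. The sketch below uses only Python's standard `logging` and `json` modules. The model names, the `PRICE_PER_1K` table, and the helpers `step_cost` and `log_agent_step` are hypothetical placeholders. They are not real provider prices or an API described in the article.

```python
import json
import logging
import time

# Hypothetical per-1K-token prices in USD; real prices vary by provider and model.
PRICE_PER_1K = {
    "small-model": {"in": 0.0005, "out": 0.0015},
    "large-model": {"in": 0.005, "out": 0.015},
}


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record):
        entry = {"ts": round(record.created, 3), "level": record.levelname,
                 "msg": record.getMessage()}
        # Merge structured fields passed via logger.info(..., extra={"fields": {...}}).
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry, sort_keys=True)


def step_cost(model, tokens_in, tokens_out):
    """Estimated USD cost of one model call under the hypothetical price table."""
    price = PRICE_PER_1K[model]
    return round(tokens_in / 1000 * price["in"] + tokens_out / 1000 * price["out"], 6)


def log_agent_step(logger, run_id, tenant, step, model, tokens_in, tokens_out, started):
    """Emit one structured log line per agent step, tagged for cost attribution."""
    fields = {
        "run_id": run_id, "tenant": tenant, "step": step, "model": model,
        "tokens_in": tokens_in, "tokens_out": tokens_out,
        "cost_usd": step_cost(model, tokens_in, tokens_out),
        "latency_ms": round((time.monotonic() - started) * 1000, 1),
    }
    logger.info("agent_step", extra={"fields": fields})
    return fields


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    log = logging.getLogger("agent")
    log.addHandler(handler)
    log.setLevel(logging.INFO)
    t0 = time.monotonic()
    # The same token counts on a small model and on a large one, to compare cost.
    log_agent_step(log, "run-42", "team-a", 1, "small-model", 1200, 300, t0)
    log_agent_step(log, "run-42", "team-a", 2, "large-model", 1200, 300, t0)
```

Every line carries `tenant` and `cost_usd`, so a log pipeline can sum spend per team or per run. Under these placeholder prices, the same 1,200 input and 300 output tokens cost about $0.00105 on the small model and $0.0105 on the large one. This illustrates why routing simpler steps to a cheaper model is a common cost-efficiency tactic.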